This is part of a SQL Server Internals Plan Caching series. Be sure to read Kalen's first post on this topic.

SQL Server has been around over 30 years, and I’ve been working with SQL Server for almost as long. I’ve seen a lot of changes over the years (and decades!) and versions of this incredible product. In these posts I’ll share with you how I look at some of the features or aspects of SQL Server, sometimes along with a bit of historical perspective

In my previous article, I talked about SQL server diagnostics, including the various options that SQL Server has for reusing a query plan. We looked at three types of query plans: adhoc, prepared, and procedure. I ended the discussion with a look at an inappropriate reuse of a plan, which can happen when SQL Server applies parameter sniffing in the wrong situations. If a plan is based on an initial value that causes the optimizer to generate a plan appropriate for that value, and then the same plan is used for a different value, the plan may no longer be optimal.

So, what can we do when parameter sniffing is a problem? We can force SQL Server to come up with a new plan. Usually, we call the act of coming up with a new plan ‘recompiling’, but it probably should be called ‘reoptimizing’. However, most people use the term ‘recompile’, so that’s what I’ll use here.

If inappropriate use of parameter sniffing is a problem, a simple solution is to just tell SQL Server to come up with a new plan. For individual statements, such as with PREPARED plans that have been autoparameterized, we can add the RECOMPILE hint to a query. Using FORCED parameterized (discussed in the previous article), this query will be parameterized.

SELECT * FROM dbo.newsales

WHERE SalesOrderID < @num;

If we want to make sure we get a new plan every time we run this query, with potentially vastly different values for @num, we can add the RECOMPILE hint as shown:

SELECT * FROM dbo.newsales

WHERE SalesOrderID < @num

OPTION (RECOMPILE);

For stored procedures, we have three options. First, we can ascertain whether recompiling will actually help performance by executing the procedure with the RECOMPILE option:

EXEC get_sales_range 66666 WITH RECOMPILE;

This option will cause a new plan to be generated just for this one execution. It will not be saved and certainly won’t be reused. The usecount value shown in sp_cacheobjects (described in the previous post) for the procedure will not increase since the original plan is not being reused.

Second, if we find that executing WITH RECOMPILE helps, we could consider recreating the procedure with the RECOMPILE option, in which case it will never reuse the plan and the procedure won’t show up in the plan cache at all.

DROP PROC IF EXISTS get_sales_range;GO

CREATE PROC get_sales_range

@num int

WITH RECOMPILE

AS

SELECT * FROM dbo.newsales

WHERE SalesOrderID < @num;

GO

For my simple little procedure, using the WITH RECOMPILE option for the whole procedure might make sense. But if the procedure is more complex, it might not make sense to recompile the entire procedure because one statement is causing problems. So, the third option is to use the RECOMPILE hint for a statement within the procedure, so it looks like this:

DROP PROC IF EXISTS get_sales_range;

GO

CREATE PROC get_sales_range

@num int

AS

SELECT * FROM dbo.newsales

WHERE SalesOrderID < @num

OPTION (RECOMPILE);

GO

Using one of these RECOMPILE options can force SQL Server to come up with a new plan at your request. Now, we’ll look at when your SQL Server diagnostics come up with a new plan when you don’t request it, i.e. when does automatic recompilation of an existing plan occur?

Automatic recompile of a plan occurs in two types of situations:

- First, if the optimizer determines that the existing plan is no longer correct, usually because of a change in the object definitions, it will need to come up with a new plan. For example, if you have a plan for a query that selects from TableA, and you then drop several columns, or change datatypes of columns in TableA, SQL Server will recompile the query to come up with a plan that reflects the DDL changes.

- The second situation in which automatic recompile occurs is when SQL Server determines that the plan may no longer be optimal, due to a change in statistics. In most cases, if statistics on any of the columns or indexes have been updated since the last time the plan was compiled, it will be recompiled. But this leads to another question. When are the statistics updated? Statistics can be automatically updated when enough rows in the relevant columns have changed. How many is enough? We talk about that shortly.

By default, SQL Server will update the statistics automatically because of a database option that is ON by default. But if you are a database owner (or a SQL ‘sa’, which appears as the owner in every database), you can change the options. One of the options is called AUTO_UPDATE_STATISTICS and another is called AUTO_UPDATE_STATISTICS_ASYNC. The option AUTO_UPDATE_STATISTICS is ON in the tempdb database, so every new database inherits this option. When this option is ON, and the query execution engine detects changes to a sufficient number of rows while a query is being processed, execution will pause while the statistics are updated and then the query is recompiled. The other option, AUTO_UPDATE_STATISTICS_ASYNC, can potentially have less of an effect on query execution time because execution doesn’t pause, at the cost of using a possible suboptimal plan. With the second option, if the execution engine detects a need to update statistics, a background thread is fired off to do the update, and the main thread continues executing the query with the original statistics and the original plan. The next query that accesses the affected tables and sees the updated statistics will recompile the query, but it won’t pause and do the update of statistics in the middle of execution.

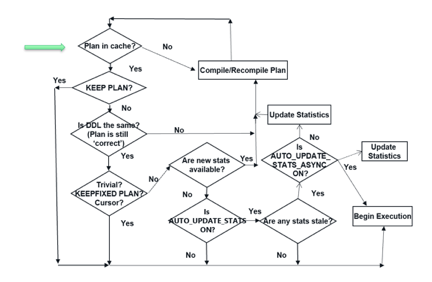

There are a few more situations as well as some query hints that control whether plans are recompiled or not, so I’ll show you a flowchart I’ll share a flowchart with you that I created for my training classes on SQL Server internals.

The arrow is where SQL Server starts processing your batch. It first checks to see if there already is a plan for your batch in cache, and if the answer is NO, follows the arrow to the right and compiles a plan. The plan is put in cache, and then SQL Server starts again. Yes, the plan should be in cache this time, so then it follows the arrow downward and asks if a hint called KEEP PLAN has been used. If YES, SQL Server starts executing the plan immediately and doesn’t do any further checks.

The next question is whether any DDL changes have been made. If no, it asks about several other situations that I’m not going to be able to talk about in this article. In fact, I’m really not going to step through every option here. I’ll leave that to you. But if you have any questions or confusions, feel free to ask them in the comments section here, or tweet me at @sqlqueen. I will point out the question at the far right: Is AUTO_STATS_ASYNC ON? Here, you can see that if the answer is YES, there are two actions. One branch just starts execution with the existing plan, and the other is the background thread that updates the statistics but then doesn’t do anything else. The next query will encounter the decision box in the middle “Are new stats available” and should answer YES, so the query will be recompiled.

The only other thing I’ll talk about is the question “Are any stats stale?”This basically means the statistics are out of date because too many changes have been made. So now we can talk about how many is too many.

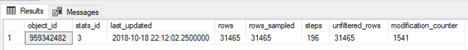

Although there are different values used for very small tables, for any table with more than 500 rows in it, prior to SQL Server 2016, statistics would be considered ‘stale’ when the number of changes to the column that the statistics were based exceeded 20% of the number of rows in the table. So, for a table of 1000 rows, this could mean 200 inserts, 200 updates, or 200 deletes. It could be 200 rows changes, or 5 rows updated 40 times each. SQL Server even gives us a function that reports how many changes have been made. You’ll need to look up the stats_id number for the statistics you are interested in, which would be the index_id if the statistics belong to an index. The stats_id can be found in the view called sys.stats. In my newsales table, I use this query to find that the stats_id for the index on the SubTotal column is 3.

SELECT name, stats_id FROM sys.stats

WHERE object_id = object_id('newsales');

Then I can use that value to look at the number of changes. Let me update some rows first:

UPDATE newsales(1541 rows affected)

SET SubTotal = SubTotal * 0.9

WHERE SalesOrderID < 45200

SELECT * FROM sys.dm_db_stats_properties(object_id('newsales'), 3);

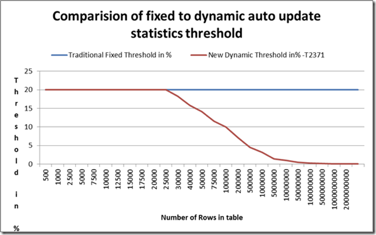

In fact, 20% is a BIG number. And for many tables, queries might benefit from updated statistics with far fewer than 20% of the rows updated. Starting in 2008R2 SP1, SQL Server included a Traceflag you could use to change the number of rows to be a sliding scale, as shown in the following graph:

Starting in SQL Server 2016, this new algorithm with the sliding scale is used by default, as long as you are in compatibility level 130 or higher.

Most automatic recompiles of query plans are due to changes in statistics. But as I mentioned above, that is not the only reason for a recompile. But since it is the most common, it can be very useful to be aware of when and how statistics are updated and make sure the statistics on your critical tables are updated often enough to make sure you get the best plans!

Analyze performance data automatically to perform SQL server diagnostics to resolve issues quickly and identify servers where performance degradation originates. Get started using Spotlight Cloud today: